Introduction

A Graphical Processing Unit (GPU) is a processor that is made up of smaller, more specialized cores. Originally designed to accelerate graphical calculations, GPUs were developed to work in parallel processing, which means that they are able to process data simultaneously in order to complete tasks more quickly. In other words, GPUs are able to perform rapid mathematical calculations which is very well suited for processing images, animations and videos. In recent times people started to use GPUs for other applications as well, like machine learning and creative production. The calculations of these operations are very similar to the ones for processing graphics. Deep learning algorithms require thousands of the same type of calculations, often matrix multiplications. A GPU is able to divide these calculations amongst the hundreds of cores it has, which gives a massive speed boost in processing these tasks.

Types of GPU

GPUs come as two types: integrated and discrete. An integrated GPU is embedded onto the CPU chip. This type of GPU does not have its own dedicated memory, instead it shares its memory with the CPU. An integrated GPU is not as powerful as a dedicated GPU, but for things like browsing the web, or streaming shows they are adequate. Apples new M chips use an integrated GPU:

A discrete GPU is separated from the processor. They have their own dedicated memory and so they don’t rely on the RAM of your system. The discrete GPUs can be divided further into gaming GPUs and workstation GPUs. The gaming GPUs are the ones that are easily accessible for consumers as they are designed, as the name suggests, for gaming. Thus their main task is to produce a high frame rate. A workstation GPU is designed for tasks like 3D modeling, video editing, machine learning etc. it should be noted though that the new-gen gaming GPUs are able to executet these professional tasks very well too.

What’s the difference between a CPU and a GPU?

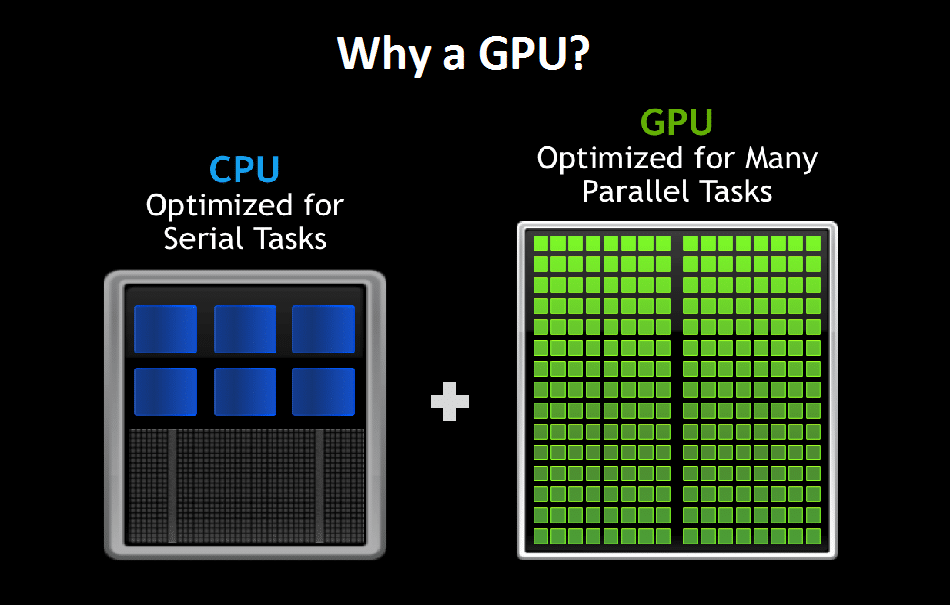

You can look at the CPU in your computer as the brain of your computer, providing the computer with the instructions and processing power to do its work. In order to give these instructions, a CPU needs to be able to ‘think’ fast, which is why CPUs are equipped with a lot of cache memory. CPUs are equipped with several cores, most consumer ones have between two and twelve cores. These cores make use of cache memory to reduce latency, enabling the CPU to process the instructions provided by applications quicker. This makes a CPU good at serial processing.

A GPU, on the other hand, is designed to handle graphics-related work like graphics, effects, and videos. In order to do this effectively, a GPU needs to be able to handle a lot of data at the same time, i.e. the GPU needs to have a high throughput. When you’re gaming, for example, you want to maximize your FPS to enable a smoother gaming experience. GPUs do this by breaking problems into thousands or sometimes millions of separate tasks and working them out at once. This is called parallel processing, and it is why GPUs are equipped with hundreds or sometimes even thousands of more specialized cores, which gives GPUs the high throughput they need.

Have a look at the image below, which shows the architecture of both the CPU and GPU. You can see that a CPU has a lot more cache as opposed to the GPU. This reduces the CPU’s need to access underlying, slower storage like a hard drive and thus reduces latency, i.e it enables the CPU to think faster. The image also clearly shows that a GPU consists of a lot more cores than a CPU (The green squares). These cores are smaller than the cores used in a CPU, but they enable the GPU to perform similar calculations across one data stream at the same time.

You can compare the CPU to an assistant, able to do anything you ask of it: clean your house, cook you a nice meal, or get you something to drink. The number of chores a CPU can do at the same time is limited to the number of cores it has. A CPU with six cores, for example, can handle six chores at once. A GPU can be compared to a colony of ants: each ant may only know how to do a couple of things like gathering food or taking care of their young and queen, but they are able to do these things with great efficiency and all at the same time.

In short:

- A CPU is a more general purpose chip, able to do lots of things with a low latency, but the lack of parallelisation limits the throughput of the CPU.

- A GPU is more specialized, using smaller cores that are able to perform similar calculations at the same time. This makes a GPU great for parallelization, as the throughput is high, but the smaller cores mean that a GPU lacks the low latency that a CPU offers.

- A CPU is more like a general purpose chip, while the GPU is more specialized

- A CPU consists of fewer cores, while a GPU can have hundreds

- A CPU is good at serial processing, while a GPU is good at parallel processing

- A CPU offers low latency, while a GPU offers high throughput

GPU vs CPU for Machine Learning

A CPU is not regarded as the main choice for data-intensive machine learning processes like training, as it is (most of the time) not the most efficient option when compared to a GPU. However, some of these processes or algorithms do not require the parallelization power that a GPU offers. For example: a model that runs on time series data, or a recurrent neural network that uses sequential data. In these cases the CPU would be the most cost-efficient option. Additionally, some machine learning operations like data preprocessing are better suited for CPUs, as these tasks require the sequential power of a CPU instead of the parallelisation power of the GPU.

Typically GPUs are more expensive than a CPU. This is in large part because of the fact that in most cases a GPU is a more complicated device than a CPU. NVIDIA GPUs based on the Pascal structure use 16 billion transistors, while Intel CPU servers run on 5 billion transistors and a consumer grade CPU uses less than a billion transistors. As such, the power consumption of a GPU is higher than that of a CPU, which means that the operational costs of a GPU are higher than that of a CPU too.

In some cases the usage of a GPU does not result in a massive speed increase when looking at an entire operation. In the past, a colleague of mine compared the speed of running an ONNX model on both a CPU and GPU. The results were interesting: the GPU was almost twice as fast at inferencing but the total request time, which includes file uploading/downloading and other overhead, differed by less than 0.02 seconds. This confirms what we already knew: using a GPU for inferencing or training can result in massive performance gains, sometimes even higher than a factor of 60, but it doesn’t necessarily speed up the entire operation.

In general, Deep learning algorithms need massive amounts of data for training. These larger training sets come with a larger number of computational operations. These computations are mathematically very similar to the calculations required for image manipulation, the ones that a GPU was designed to execute. As such, deep learning algorithms are very well suited for GPUs since they are able to exploit the higher parallelization power. The larger the dataset that is used for training, the greater the advantage will be of using a GPU as opposed to a CPU in terms of speed of execution.

In this article a convolutional neural network (CNN) was trained on the CIFAR-10 dataset. The results show while a GPU is more expensive to run, opposed to a CPU, the training time is reduced massively:

| Type | Effective batch size* | Training duration (s) | Time per epoch (s) | Unit cost (€/h) | Total training cost (€) |

| GPU | 50 | 443 | 08.6 | From €4 | 29.53 |

| GPU | 8 | 2616 | 51.7 | From €4 | 174.40 |

| CPU | 50 | ~5 hours | 330 | From €1.3 | 6.5 |

| CPU | 4 | 10+ hours | 10+ minutes | From €1.3 | 13 |

In short:

- A CPU is not the preferred choice for data intensive processes

- GPUs are more complicated than CPU, which is why they are more expensive

- A GPU does not always guarantee a speed boost when used for all processes

- A GPU only benefits when the data can be handled in parallelisation

- Deep learning benefits massively from the use of GPUs

Saving costs with on-demand GPUs

Nowadays GPUs are also available in the cloud. Cloud GPUs or on-demand GPUs eliminate the need of acquiring an on-premise GPU. While using on-demand GPUs may sound more expensive than acquiring your own GPU, in practice this is not often the case. Let’s dive deeper into the advantages and disadvantages of using an on-premises GPU as opposed to a cloud GPU.

On-premise GPU

Of course, using an on-premise GPU, or buying a GPU can be a big upfront investment since the GPU itself requires an infrastructure to be used efficiently. GPUs are known to produce a lot of heat and require a lot of electricity to perform optimally. The generated heat and consumed power differ per use case, of course, but you can assume that a GPU needs a good cooling system with a sufficient power supply to run effectively. Costs of these systems for both can reach hundreds if not thousands of dollars. Furthermore, servers are used to accommodate the GPU and its infrastructure. These servers need to have the necessary slots and power capacity for the GPU. If you’re using a high end GPU for training and inferencing combined with a low end or mid range CPU that preprocesses the data, for example, you might end up in the situation where your CPU is not able to keep up with your GPU. As a result your GPU will be starved for data, as it needs to wait for the CPU to finish processing it. NVIDIA has written out a guide for choosing a server for deep learning training, listing all the requirements a GPU server needs to meet.

Making use of on-demand GPUs means that your data also needs to be processed and stored in the cloud, where it could be at risk of data breaches of cyberattacks. Of course, having an on-premise GPU does not guarantee that your data is 100% safe. But an on-premise GPU at least gives you full control of your infrastructure and data storage.

On-demand GPUs

Making use of on-demand GPUs saves you the up-front investment for all hardware. This is one of the reasons why on-demand GPUs are very well suited to start-ups: not only are they able to use high end GPUs without having to invest in the hardware, it’s also easier to scale up or down depending on the demand.

Cloud providers require you to reserve GPU resources, which can result in overpaying as the graph below shows. UbiOps is a company that only charges you for your actual computing needs, resulting in never paying too much for your GPU usage. UbiOps also makes sure that there is enough on-demand GPU availability for you to scale up instantly, with a high throughput and 99.99% uptime.

What GPU is best for you?

Deciding which GPU is best for your use case is a difficult process, as a lot of choices need to be made. The first choice you need to make is whether to go with an AMD graphics card or a NVIDIA graphics card..

For most larger libraries, AMD requires you to use an additional tool (ROCm), and in some cases you would need to use an older version of PyTorch or Tensorflow to keep your card working due to a lack of support. NVIDIA cards also have access to CUDA, which refers to two things: the massive parallel architecture of hundreds of cores and the CUDA parallel programming model. The latter helps to program all the cores efficiently. Most newer NVIDIA cards are designed specifically for machine learning, like the newer 40-series and 30-series. These cards come equipped with Tensor Cores, which are like CUDA cores but more specialized. CUDA cores are optimized for a wide range of tasks, while Tensor Cores are optimized for accelerating deep learning and AI workloads. Note that support for AMD cards will improve in the future, but at the time of writing this page NVIDIA is the clear winner between the two brands.

Do keep in mind that the support for AMD cards will improve in the future.

Now it’s time to dive a bit deeper into the features you want for your GPU. The most important feature of a GPU is its RAM, or “memory”, which is also called VRAM for GPUs. As mentioned before, GPUs are all about having a high throughput, and the throughput of a GPU is dependent on the VRAM. VRAM is particularly important if you’re working with large amounts of data – think audio, images or videos. 4GB of RAM is considered to be the absolute minimum, and with this amount of RAM you’re able to work with not too complicated models. Deep learning models, for example, will be a stretch for this amount of VRAM but anything less complicated will run fine. 12GB of RAM is considered to be the best value for money, as it is sufficient to cope with most larger models that deal with videos and images but it will not break the bank. Of course, if your budget allows it you can get a GPU that has even more VRAM.

The next thing to consider is the number of cores. The more cores a GPU has, the more tasks it can handle simultaneously, and thus can compute faster. As mentioned before, NVIDIA cards are equipped with CUDA cores and Tensor Cores. CUDA cores are the more generalized ones and were designed to optimize the efficiency for a range of tasks, while Tensor Cores were designed specifically for the optimization of machine learning and deep learning tasks.

Another thing to consider is the compute capability of the GPU. The compute capability is an indication of the computing power of a GPU and is defined by the hardware features of the GPU (the number of cores, for example). The compute capability of a GPU is linked to the architecture that it is built on and determines what features you can use. If you want to use Tensorflow with GPU acceleration, for example, you will need to have a GPU with a compute capability of 3.5 or higher.

Another metric to keep an eye on is the Thermal Design Power (TDP), which NVIDIA defines as follows:

“TDP is the power that a subsystem is allowed to draw for a “real world” application, and also the maximum amount of heat generated by the component that the cooling system can dissipate under real-world conditions.”

The TDP is an indication of how much power your GPU can draw in a real-world scenario. In general, the higher the TDP of a GPU, the higher the power consumption, the higher the operational costs. This is shown in the excel sheet below, where the TDP is defined as ENERGY: